The Complete Guide to Automatic Speech Recognition (ASR)

98% accurate, real-time transcription in just a few clicks. 58 languages and multiple platforms supported.

Automatic speech recognition, often referred to as ASR, is speech-to-text technology that automatically converts spoken language into written text.

This technology has many applications, including dictation and visual voicemail software.

In this article, we'll discuss how automatic speech recognition technology works. We'll also talk about how it has evolved and what you can do with it today.

What is automatic speech recognition (ASR)?

ASR recognizes spoken words and converts them into text.

It's one of technology's most widely used features. Think about how often you've said "OK Google" or "Hey Siri," or opened an app on your phone with your voice alone.

This feature has become so widespread that consumers don't even notice it anymore. That doesn't make it any less useful: ASR lets people speak to a device that converts their words into text.

Brief history of ASR

Automatic speech recognition has long been the domain of science fiction. Even in today's world of Siri and Alexa, many people still don't know how it works or why it took so long to develop.

ASR first emerged in 1952, when Bell Labs built a system called Audrey. It was designed to transcribe spoken numbers, but it could only recognize the ten digits from zero to nine.

Over the following decades, ASR researchers focused on developing systems capable of transcribing conversations. These new approaches included direct transcription, in which researchers manually assigned words to parts of speech, and broad-coverage strategies, in which computers listened to a wide range of speakers.

Although both approaches successfully recognized isolated words, neither could reliably understand entire sentences. Researchers adapted their techniques to different environments, including remote listening stations.

Hidden Markov Models

The next breakthrough came in the mid-1970s, when researchers began using hidden Markov models (HMMs) for speech recognition. The idea is to represent each word as a sequence of hidden states.

Unfortunately, the computational cost was high even for recognizing a small vocabulary from speech signals. To speed up recognition, researchers further refined the HMM: they added more states and used backward sampling to estimate its probability distributions.

Neural Networks

Much of today's speech recognition uses neural networks. Modeled after how animal brains process stimuli, they began to be applied to speech recognition in the 1980s.

The idea is that computer scientists can build a neural network by connecting artificial neurons, which then emulate how real neurons connect.

Even today, some of the most advanced speech recognition programs still rely on neural networks. Their technical details are extensive, but the key point is that neural networks allow computers to learn from experience rather than simply following programmed instructions.

Deep Learning

Deep learning is now revolutionizing systems that used to be extremely difficult to build because of their complexity. A deep learning model can learn to recognize sound patterns without being explicitly programmed, which enables computers and smartphones to interpret what we say more accurately than before.

How does automatic speech recognition work?

There are two parts to automatic speech recognition:

Recognizing phonemes and words

Responding appropriately

The first step involves the computer identifying phonemes, the smallest units of sound that distinguish one word from another. As you speak into a microphone, the machine analyzes the recording of your voice and recognizes the phonemes in it.

It then uses natural language processing (NLP) to turn those phonemes into readable text. It does this by comparing the recordings against databases of stored transcriptions.

Sometimes those transcriptions were produced by actual humans. In other cases, the recordings are matched using a neural network or deep learning algorithm.

The system will then make corrections as needed.
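To make the comparison step concrete, here is a minimal sketch, assuming the recognizer has already produced a clean sequence of phonemes. It matches that sequence against a tiny pronunciation dictionary, greedily taking the longest entry that fits. The dictionary, its ARPAbet-style phoneme symbols, and the `phonemes_to_words` function are hypothetical; real systems score many competing matches probabilistically instead of requiring exact ones.

```python
# Hypothetical pronunciation dictionary: word -> phoneme sequence (ARPAbet-style symbols).
LEXICON = {
    "hey": ("HH", "EY"),
    "call": ("K", "AO", "L"),
    "mom": ("M", "AA", "M"),
}

# Invert the dictionary so a phoneme sequence can be looked up directly.
PHONES_TO_WORD = {phones: word for word, phones in LEXICON.items()}
MAX_LEN = max(len(phones) for phones in PHONES_TO_WORD)


def phonemes_to_words(phonemes):
    """Greedily segment a phoneme stream into dictionary words, longest match first."""
    words, i = [], 0
    while i < len(phonemes):
        for length in range(min(MAX_LEN, len(phonemes) - i), 0, -1):
            candidate = tuple(phonemes[i:i + length])
            if candidate in PHONES_TO_WORD:
                words.append(PHONES_TO_WORD[candidate])
                i += length
                break
        else:
            # No entry matched: mark this phoneme as unknown and move on.
            words.append("<unk>")
            i += 1
    return words


print(phonemes_to_words(["HH", "EY", "K", "AO", "L", "M", "AA", "M"]))
```

Greedy longest-match is the simplest possible search; it is used here only because it keeps the lookup idea visible.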

Components of an ASR system

There are four main components of an automatic speech recognition system. These include:

Feature Extraction

Acoustic Modeling

Language Model

Classification/Scoring

Each plays an essential role in helping computers understand what we say.

Feature extraction

Feature extraction pulls measurable characteristics, such as pitch, volume, and accent, out of an audio recording. Think of these features as word fingerprints that help identify spoken words.
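As a rough illustration, the sketch below splits audio into short overlapping frames and computes two of the characteristics mentioned above for each one: volume (as root-mean-square energy) and pitch (estimated with a simple autocorrelation search). The 8 kHz sample rate, frame sizes, pitch range, and function names are assumptions for illustration. It does not attempt accent, and production systems usually compute spectral features such as MFCCs instead.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this sketch)


def rms(frame):
    """Root-mean-square energy: a simple proxy for volume."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def estimate_pitch(frame, sample_rate=SAMPLE_RATE, fmin=50, fmax=500):
    """Estimate pitch in Hz by finding the lag with the highest autocorrelation."""
    min_lag = sample_rate // fmax
    max_lag = min(sample_rate // fmin, len(frame) - 1)
    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


def extract_features(signal, frame_size=400, hop=200):
    """Split audio into overlapping frames and compute (volume, pitch) for each."""
    features = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size]
        features.append((rms(frame), estimate_pitch(frame)))
    return features


# Usage: a synthetic 200 Hz tone stands in for a voiced sound.
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(1600)]
for volume, pitch in extract_features(tone)[:2]:
    print(f"volume={volume:.3f}, pitch={pitch:.1f} Hz")
```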

Acoustic modeling

Acoustic modeling turns the extracted features into a statistical parametric model of speech. It then compares that model against other models using likelihood ratios.
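Here is a minimal sketch of the likelihood-ratio idea, assuming each sound class is modeled by a diagonal Gaussian, one simple kind of statistical parametric model. The two classes, their feature values, and the `GaussianModel` class are hypothetical toy data, not a real acoustic model.

```python
import math
from statistics import mean, pvariance


class GaussianModel:
    """Diagonal Gaussian over feature vectors, fitted from example frames."""

    def __init__(self, examples):
        dims = list(zip(*examples))  # group values by feature dimension
        self.means = [mean(d) for d in dims]
        # A small floor keeps each variance positive for tiny training sets.
        self.vars = [max(pvariance(d), 1e-6) for d in dims]

    def log_likelihood(self, x):
        """Log of the Gaussian density of feature vector x under this model."""
        return sum(
            -0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
            for xi, m, v in zip(x, self.means, self.vars)
        )


def log_likelihood_ratio(x, model_a, model_b):
    """Positive values favor model_a; negative values favor model_b."""
    return model_a.log_likelihood(x) - model_b.log_likelihood(x)


# Hypothetical (volume, pitch) training frames for two sound classes.
sound_a = GaussianModel([(0.60, 210.0), (0.55, 190.0), (0.65, 200.0)])
sound_b = GaussianModel([(0.10, 90.0), (0.15, 110.0), (0.12, 100.0)])

llr = log_likelihood_ratio((0.58, 205.0), sound_a, sound_b)
print("sound_a" if llr > 0 else "sound_b", round(llr, 1))
```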

Language model

A language model helps the machine determine which word sequences are possible. It uses grammar rules and the probabilities of certain words occurring together within sentences.
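A bigram model is one simple way to attach probabilities to word sequences. This sketch assumes a tiny made-up training corpus and add-one smoothing, and it scores two acoustically similar phrases, the classic "recognize speech" and "wreck a nice beach" pair. The function names are illustrative.

```python
import math
from collections import Counter


def train_bigram_model(sentences):
    """Count unigrams and bigrams in a small training corpus."""
    unigrams, bigrams, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(words[:-1])  # words that can start a bigram
        bigrams.update(zip(words[:-1], words[1:]))
        vocab.update(words[1:])  # words that can follow another word
    return unigrams, bigrams, len(vocab)


def sentence_log_prob(sentence, model):
    """Log-probability of a word sequence under the bigram model, with add-one smoothing."""
    unigrams, bigrams, vocab_size = model
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    return sum(
        math.log((bigrams[(prev, cur)] + 1) / (unigrams[prev] + vocab_size))
        for prev, cur in zip(words[:-1], words[1:])
    )


# Hypothetical training corpus.
corpus = [
    "it is hard to recognize speech",
    "we recognize speech with software",
    "they walked on a nice beach",
]
model = train_bigram_model(corpus)
for candidate in ["recognize speech", "wreck a nice beach"]:
    print(candidate, round(sentence_log_prob(candidate, model), 2))
```

The higher (less negative) score marks the sequence the model finds more plausible. Longer sequences accumulate more negative log-probabilities, so real decoders typically compensate for length.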

Classification and scoring

This is a fancy term for taking everything above and determining whether or not the result is correct.

If it isn't, the system tries again. On each pass, it reads through your data, extracts features, and makes comparisons between models. Then it decides on a final result.

This process repeats many times per second. Once the result reaches an acceptable level of accuracy, the system moves on.
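The scoring step can be sketched as a weighted sum of the acoustic and language model log-scores: the system keeps the best hypothesis and rejects it if even the best falls below a threshold. The candidate scores, the `lm_weight` parameter, and the threshold value are made-up assumptions, not values from any real system.

```python
# Hypothetical candidate transcriptions with made-up log-probability scores.
# "acoustic": how well the audio matches the words; "language": how plausible the words are.
candidates = {
    "recognize speech": {"acoustic": -12.0, "language": -6.9},
    "wreck a nice beach": {"acoustic": -11.5, "language": -12.1},
}


def combined_score(scores, lm_weight=1.0):
    """Log-linear combination of acoustic and language model scores."""
    return scores["acoustic"] + lm_weight * scores["language"]


def decode(candidates, lm_weight=1.0, threshold=-25.0):
    """Return the best-scoring hypothesis, or None if it is below the threshold."""
    best = max(candidates, key=lambda text: combined_score(candidates[text], lm_weight))
    if combined_score(candidates[best], lm_weight) < threshold:
        return None  # not confident enough: the system would try again
    return best


print(decode(candidates))
```

With `lm_weight=0.0` the acoustic score alone decides, which would pick the other phrase here; that contrast is the reason the two models are combined.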

What is natural language processing (NLP)?

NLP focuses on helping computer programs interpret the meaning of words and sentences. It allows computers to match phonemes from human speech and convert them into text. NLP combines with ASR to interpret and execute spoken commands.

How does NLP work?

NLP deciphers natural language structure and grammatical guidelines. We can break down NLP into two distinct components:

Natural Language Understanding (NLU)

Natural Language Generation (NLG)

NLU focuses on automatically understanding human speech. NLG helps you create text that reads as if a human wrote it.

NLU identifies aspects of sentences based on syntax, semantics, and pragmatics. It searches for keywords or qualifying content using probability. Then it can understand the context and differentiate between entities, concepts, or actions.
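One common probabilistic way to work out what a user wants from the words they use is a naive Bayes classifier. The sketch below assumes a handful of made-up labeled commands and hypothetical intent names; it illustrates the idea and does not describe how any particular NLU engine works.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled utterances: (text, intent).
TRAINING = [
    ("call mom", "call"),
    ("call the office", "call"),
    ("play some music", "play_music"),
    ("play my workout playlist", "play_music"),
    ("what is the weather today", "weather"),
    ("will it rain today", "weather"),
]


def train(examples):
    """Count words per intent and how often each intent appears."""
    word_counts = defaultdict(Counter)
    intent_counts = Counter()
    for text, intent in examples:
        intent_counts[intent] += 1
        word_counts[intent].update(text.split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, intent_counts, vocab


def classify(text, model):
    """Multinomial naive Bayes: pick the intent with the highest log-probability."""
    word_counts, intent_counts, vocab = model
    total = sum(intent_counts.values())

    def score(intent):
        counts = word_counts[intent]
        denom = sum(counts.values()) + len(vocab)
        log_p = math.log(intent_counts[intent] / total)  # prior
        for word in text.lower().split():
            log_p += math.log((counts[word] + 1) / denom)  # add-one smoothing
        return log_p

    return max(intent_counts, key=score)


model = train(TRAINING)
print(classify("please call my sister", model))
```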

NLG uses computational linguistics to produce readable text. It makes data-driven decisions during sentence construction. NLG may also write phrases that meet specific user objectives without compromising quality.
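The simplest form of NLG is template-based: code decides how to phrase a sentence based on the data it receives. This sketch assumes a hypothetical meeting-summary record with `title`, `minutes`, and `action_items` fields and makes small data-driven choices (units, pluralization, list phrasing). Modern NLG systems often use neural models instead.

```python
def plural(n, unit):
    """Return e.g. '1 hour' or '15 minutes'."""
    return f"{n} {unit}{'' if n == 1 else 's'}"


def describe_meeting(data):
    """Template-based NLG: turn a hypothetical meeting record into readable sentences."""
    minutes = data["minutes"]
    items = data["action_items"]
    # Data-driven choice: express long meetings in hours and minutes.
    if minutes >= 60:
        hours, mins = divmod(minutes, 60)
        duration = plural(hours, "hour") + (f" and {plural(mins, 'minute')}" if mins else "")
    else:
        duration = plural(minutes, "minute")
    # Data-driven choice: phrase the follow-up based on how many items exist.
    if not items:
        follow_up = "No action items were recorded."
    elif len(items) == 1:
        follow_up = f"The one action item is to {items[0]}."
    else:
        follow_up = f"The action items are to {', '.join(items[:-1])} and {items[-1]}."
    return f"The {data['title']} meeting lasted {duration}. {follow_up}"


print(describe_meeting({
    "title": "weekly sync",
    "minutes": 75,
    "action_items": ["update the roadmap", "email the client"],
}))
```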

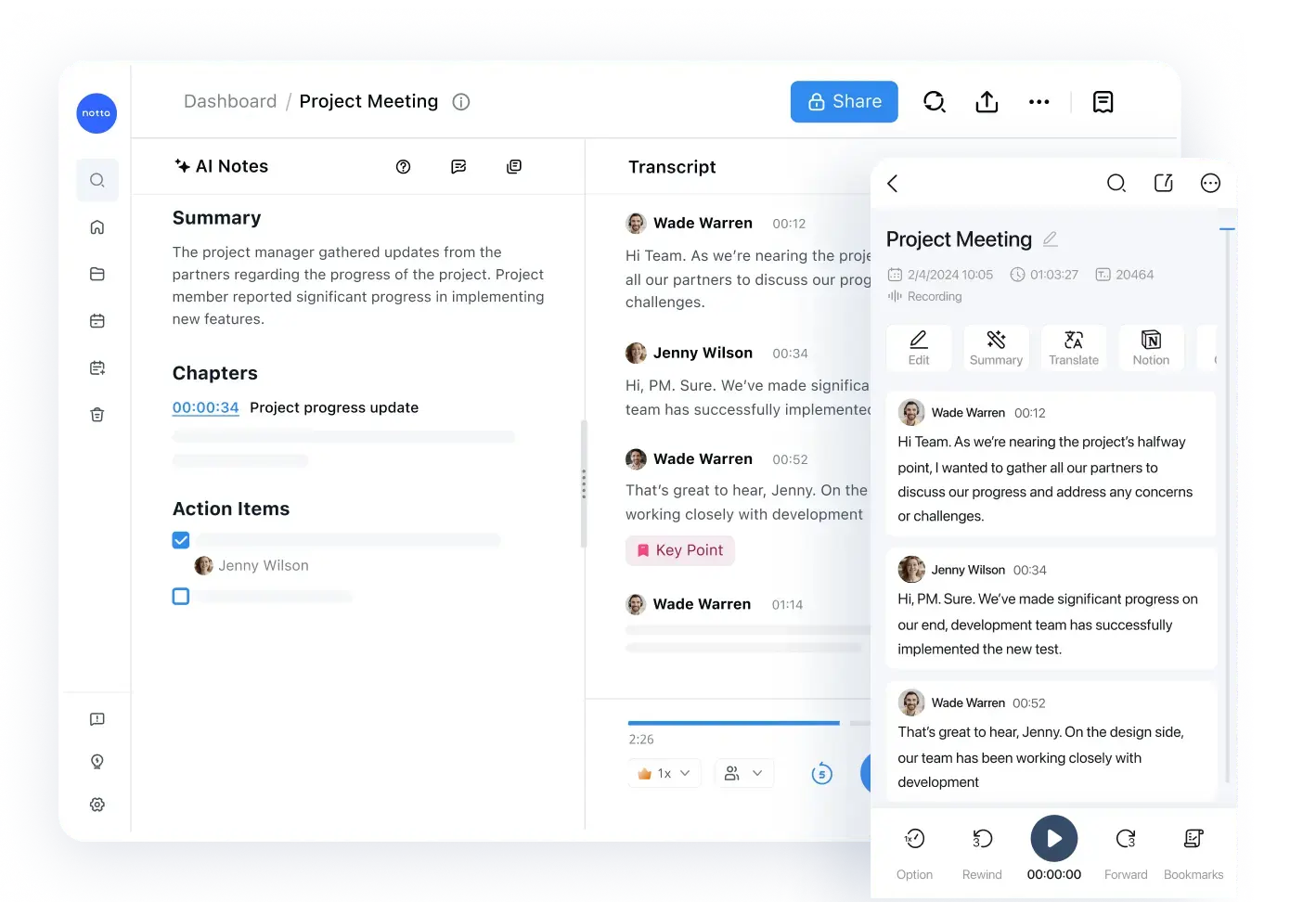

Notta can convert your spoken interviews and conversations into text with 98.86% accuracy in minutes. Focus on conversations, not manual note-taking.

ASR models, methods, and algorithms

Here we'll get you up to speed on some commonly used ASR models.

Hidden Markov Models (HMM)

An HMM is a statistical model. It describes a sequence of observed variables as being generated by a sequence of hidden states that can't be observed directly. These observations can be acoustic signals representing words spoken by a person.

Developers use software to train HMMs on speech samples. They then use these models to recognize new speech patterns in real time.

An HMM works by assigning states that change over time, with transition probabilities governing how the model moves from one hidden state to the next. Each word has its own HMM with specific probabilities attached, and looking at past sequences of words together can help predict which word comes next.
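The "each word has its own HMM" idea can be sketched with the forward algorithm, which sums the probability of an observation sequence over all hidden state paths; the word whose model gives the highest likelihood wins. This assumes left-to-right word models whose hidden states are phonemes and whose observations are symbolic labels standing in for acoustic features. The symbols, probabilities, and helper names are hypothetical, and real systems use continuous emission densities and far larger models.

```python
# Hypothetical codebook of quantized acoustic labels (one per phoneme, for simplicity).
SYMBOLS = ["Y", "EH", "S", "N", "OW"]


def left_to_right_hmm(phones, stay=0.6, noise=0.05):
    """Build a left-to-right word HMM in which each hidden state is one phoneme."""
    n = len(phones)
    start = [1.0] + [0.0] * (n - 1)  # always begin in the first phoneme
    trans = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        trans[i][i], trans[i][i + 1] = stay, 1.0 - stay  # stay or advance
    trans[n - 1][n - 1] = 1.0  # the last phoneme absorbs the remaining frames
    emit = []
    for phone in phones:
        probs = {symbol: noise for symbol in SYMBOLS}
        probs[phone] = 1.0 - noise * (len(SYMBOLS) - 1)  # mostly emit its own label
        emit.append(probs)
    return start, trans, emit


def forward_likelihood(observations, hmm):
    """Forward algorithm: P(observations | HMM), summed over all hidden state paths."""
    start, trans, emit = hmm
    n = len(start)
    alpha = [start[s] * emit[s][observations[0]] for s in range(n)]
    for obs in observations[1:]:
        alpha = [
            sum(alpha[p] * trans[p][s] for p in range(n)) * emit[s][obs]
            for s in range(n)
        ]
    return sum(alpha)


WORD_MODELS = {
    "yes": left_to_right_hmm(["Y", "EH", "S"]),
    "no": left_to_right_hmm(["N", "OW"]),
}


def recognize_word(observations):
    """Pick the word whose HMM assigns the observations the highest likelihood."""
    return max(WORD_MODELS, key=lambda w: forward_likelihood(observations, WORD_MODELS[w]))


print(recognize_word(["Y", "Y", "EH", "EH", "S", "S"]))
```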

Neural Networks

Image and speech recognition technology often uses artificial neural networks. This form of machine learning has become popular with large tech companies.

An artificial neural network (ANN) is a series of connected nodes that take in an input signal and adjust their output based on what they learned from prior inputs.

Neural networks learn a function from examples. You train them by feeding them a massive set of example data. They learn which aspects of that data are relevant to their task, then apply those findings to new data.

Developers train neural networks on recordings of people saying particular words. The networks can then model how we speak and determine which word to use based on how we typically talk.
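To show a network learning a function from examples, here is the simplest possible case: a single artificial neuron (a perceptron) trained to separate speech frames from silence. The two features, the training data, and the learning rate are made up for illustration. Modern ASR networks are far larger and are trained with gradient descent rather than the perceptron rule.

```python
def predict(weights, bias, features):
    """Fire (1) if the weighted sum of inputs plus bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, features)) + bias > 0 else 0


def train_perceptron(examples, epochs=20, lr=0.1):
    """Train a single artificial neuron from labeled (features, label) examples."""
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # -1, 0, or +1
            # Nudge each weight toward the correct answer in proportion to its input.
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Hypothetical frames: (volume, background_hum) -> 1 for speech, 0 for silence.
frames = [
    ((0.9, 0.3), 1), ((0.8, 0.7), 1), ((0.7, 0.1), 1),
    ((0.1, 0.6), 0), ((0.2, 0.2), 0), ((0.05, 0.9), 0),
]
weights, bias = train_perceptron(frames)

# Apply what was learned to frames the neuron has never seen.
print(predict(weights, bias, (0.85, 0.5)), predict(weights, bias, (0.15, 0.4)))
```

Because this toy data is linearly separable, the perceptron rule is guaranteed to converge; after training, the volume weight ends up much larger than the hum weight, which is the "learning which aspects are relevant" step in miniature.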

Deep Learning

Deep learning, in short, is a form of machine learning that uses artificial neural networks with many layers. It has advanced so far that models can now have more than 20 layers.

A biological neural network such as the human brain consists of neurons connected by synapses. Neurons are specialized cells that send electrochemical signals to one another across these synapses.

As a signal passes through an artificial neural network, each layer transforms it and picks up more of its characteristics, much like how we humans take in information.

With enough input data, a deep learning model learns how its layers should work together. It can then calculate how these groups of neurons behave over time.
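To illustrate what "many layers" means in practice, this sketch stacks 21 fully connected layers (20 hidden layers plus an output layer) and passes one input through them. The layer sizes, the 13 input features, and the 5 output classes are assumptions. The weights are random and untrained, so the output probabilities are meaningless until the network is trained with backpropagation, which is omitted here.

```python
import math
import random


def dense_layer(n_in, n_out, rng):
    """Randomly initialized weights and zero biases for one fully connected layer."""
    scale = math.sqrt(2.0 / n_in)  # He-style initialization, common with ReLU
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Pass input through each layer: ReLU on hidden layers, softmax on the last."""
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in z]  # ReLU activation
        else:
            peak = max(z)  # subtract the max for numerical stability
            exps = [math.exp(v - peak) for v in z]
            total = sum(exps)
            x = [e / total for e in exps]  # probabilities over output classes
    return x


rng = random.Random(0)
sizes = [13] + [32] * 20 + [5]  # hypothetical: 13 inputs, 20 hidden layers, 5 classes
layers = [dense_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]
probs = forward([rng.random() for _ in range(13)], layers)
print([round(p, 3) for p in probs])
```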

Advantages of speech recognition

Speech recognition has many advantages. Significant advantages include:

Increased productivity

Faster capture than human typing

Real-time voice-to-text

Increased productivity

Speech recognition technology saves time by eliminating a step in completing tasks. For example, if you're typing an email or a report but suddenly have to leave your desk before you can finish it, you can use ASR software to dictate your notes instead of writing them out longhand.

Captures speech faster than humans type

By nature, people speak much faster than they can type. When you use an app or software that takes advantage of ASR, it doesn't have to wait for you to finish typing. The software captures and understands your speech as you go. It results in more accurate transcription in less time.

Voice-to-text in real time

Speech recognition software is constantly getting better. With voice recognition apps such as Notta, you can quickly transcribe entire meetings. It saves time, it's highly accurate, and you never have to worry about retaking notes.

Disadvantages of speech recognition

If you've ever used voice recognition software, you know it can be hit or miss in terms of accuracy and efficiency. If you're considering automatic speech recognition, be aware that this technology has some disadvantages.

Training takes time

ASR does take a bit more effort than implementing other types of machine learning. You may need to devote some real human time for training purposes. However, after the initial setup, ASR is easy to scale using neural networks and deep learning.

There are vocal and language differences among populations

Automatic speech recognition has to account for how different groups speak. If you're designing software that needs to accommodate accents and dialects, plan on testing it in real-world scenarios with your target market.

Data security concerns with neural networks & deep learning

Developers are constantly tweaking and improving neural networks, which also means people are regularly using them in new ways.

Neural networks learn from vast amounts of data, so their potential for misuse is also huge. Those working with this technology must watch for ways people can misuse it.

ASR applications

You can talk into your smartphone and use your voice for many features. You can dictate text messages or Facebook updates. You can send emails, turn on airplane mode or find a friend in your contacts list.

Today, smartphones have made our lives easier by allowing us to do more tasks on the go than ever before. They also let us take better notes and memos. Apps like Notta help you remember key points of any conversation.

No longer are we limited by pen and paper or a single notebook when noting important information. Instead, we can now seamlessly record everything in real-time using ASR technology.

The steps of automatic speech recognition once required manual work. Today, machines can do most or all of them without any human interaction, and developers can solve many different problems using automatic speech recognition technology.

Some examples include:

Call routing

Call queuing

Dictation systems (like Siri)

Language translation services (like Google Translate)

Audio transcription (turning audio into text)

Voice search

These applications aren't just limited to mobile devices anymore.

You can now find them in vehicles like cars and airplanes. Even smart home appliances like televisions and thermostats are using ASR.

It's not just large companies using ASR technology either. Anyone can download software from third-party vendors or find open-source solutions online!

This makes automatic speech recognition accessible to everyone. You don't need a ton of technical knowledge.

Use Notta's AI transcription tool to quickly and easily transcribe audio and videos. We guarantee accuracy and ease of use.

FAQs

Is ASR part of NLP?

People often confuse speech recognition (ASR) with natural language processing (NLP). They're similar but slightly different areas of computer science.

ASR focuses on the fundamental task of converting speech to text. It begins by analyzing vocal information to identify phonemes. NLP then processes the result to form sentences and interpret their meaning.

The two work together in synchrony. NLP can tell an ASR engine what its focus should be. An ASR engine can give NLP engines context to understand the meaning behind words.

What are the types of speech recognition?

Some different types of speech recognition include:

Natural Language

Discrete

Continuous

Speaker Dependent

Speaker Independent

Each variation has its own set of strengths and weaknesses. A proper understanding of one of these forms requires a basic understanding of how they all work.

Is NLP AI or ML?

NLP is a branch of artificial intelligence that makes it possible for machines to understand human language. Modern NLP relies heavily on machine learning, including deep learning and neural networks.

NLP allows machines to identify patterns and relationships in large volumes of speech. This technology is an essential ingredient for automating processes based on language alone. These include data mining, natural language generation, speech recognition, translation, and chatbots.

Final thoughts

Automatic speech recognition is the cornerstone of the technology we use in Notta. Our powerful software employs the most advanced natural language processing algorithms, which we design to transcribe voice to text with very high accuracy.