13 Best Free Speech-to-Text Open Source Engines, APIs, and AI Models


Automatic speech-to-text recognition converts an audio file into editable text. Computer algorithms do this in four steps: analyze the audio, break it down into parts, convert those parts into a computer-readable format, and then match that representation to the most likely text.

In the past, this task was reserved for proprietary systems. That put users at a disadvantage because of high licensing and usage fees, limited features, and a lack of transparency.

As more people researched these tools, it became possible to create your own language processing models with the help of open-source voice recognition systems. These systems, made by the community for the community, are easy to customize, cheap to use, and transparent, giving the user control over their data.

Best 13 open-source speech recognition systems

An open-source speech recognition system is a library or framework consisting of the source code of a speech recognition system. These community-based projects are made available to the public under an open-source license. Users can contribute to these tools, customize them, or even tailor them to their needs.

Here are the top open-source speech recognition engines you can start with:

1. Whisper

Whisper is OpenAI’s speech recognition model, offering both transcription and translation. Released in September 2022, it is one of the most accurate automatic speech recognition models available. It stands out from other tools on the market because of the volume of data it was trained on: 680,000 hours of audio collected from the internet. This large and diverse dataset is what gives the model its near-human robustness.

You can run Whisper from Python or from its command-line interface. Five model sizes are available, each with different capabilities: tiny, base, small, medium, and large. The larger the model, the more accurate the transcription tends to be, but the slower it runs and the more memory it needs. To get the most out of the larger models, you will need a capable GPU.

Whisper does not beat models that specialize in LibriSpeech (one of the most common speech recognition benchmarks). However, when its zero-shot performance is measured across diverse datasets, it makes 50% fewer errors than those same models.
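As a rough illustration of the Python route, the sketch below wraps the `openai-whisper` package in a small helper. The helper name `transcribe` and its parameters are our own for illustration, and the sketch assumes that the package and `ffmpeg` are already installed.

```python
# Model sizes as listed above; larger checkpoints trade speed for accuracy.
WHISPER_SIZES = ("tiny", "base", "small", "medium", "large")


def transcribe(audio_path: str, size: str = "base", translate: bool = False) -> str:
    """Transcribe one audio file, or translate it into English, with Whisper.

    Hypothetical helper for illustration; not part of the whisper package.
    """
    if size not in WHISPER_SIZES:
        raise ValueError(f"unknown model size {size!r}; expected one of {WHISPER_SIZES}")

    # Imported lazily so this module loads even where openai-whisper is missing.
    import whisper

    model = whisper.load_model(size)  # downloads the checkpoint on first use
    task = "translate" if translate else "transcribe"
    result = model.transcribe(audio_path, task=task)
    return result["text"].strip()
```

Calling `transcribe("meeting.mp3", size="small")` (a placeholder file name) returns the transcript as a single string. Passing `translate=True` asks the model for an English translation instead.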

Pros

It supports content formats such as MP3, MP4, M4A, MPEG, MPGA, WEBM, and WAV.

It can transcribe 99 languages and translate them all into English.

The tool is free to use.

Cons

The larger the model, the more GPU resources it consumes, which can be costly.

It will cost you time and resources to install and use the tool.

It does not provide real-time transcription.


2. Project DeepSpeech

Project DeepSpeech is an open-source speech-to-text engine by Mozilla. This command-line tool and library is released under the Mozilla Public License (MPL). Its model follows the Baidu Deep Speech research paper, making it end-to-end trainable and capable of transcribing audio in several languages. It is trained and implemented using Google’s TensorFlow.

To use it, download the source code from GitHub and install it into your Python environment. The tool comes with a pre-trained English model. However, you can still train the model with your own data. Alternatively, you can take a pre-trained model and improve it using custom data.

Pros

DeepSpeech is easy to customize since it’s a code-native solution.

It provides wrappers for Python, C, .NET Framework, and JavaScript, allowing you to use the tool regardless of your programming language.

It can function on various gadgets, including a Raspberry Pi device.

Its word error rate (WER) is remarkably low at 7.5%.

Mozilla takes a serious approach to privacy concerns.

Cons

Mozilla is reportedly ending the development of DeepSpeech. This means there will be less support in case of bugs and implementation problems.
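The word error rate quoted in the pros above is the usual way to compare engines: the number of word substitutions, deletions, and insertions needed to turn the engine's output into the reference transcript, divided by the number of words in the reference. Here is a minimal sketch of that calculation. It assumes simple lowercasing and whitespace tokenization, whereas real benchmarks normalize text more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] is the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


reference = "the quick brown fox jumps over the lazy dog"
hypothesis = "the quick brown fox jumped over a lazy dog"
print(f"WER: {word_error_rate(reference, hypothesis):.1%}")  # WER: 22.2%
```

In this made-up example, two of the nine reference words are wrong, so the WER is 2/9. A 7.5% WER means roughly one error in every thirteen words.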

3. Kaldi

Kaldi is a speech recognition toolkit created for speech recognition researchers. It’s written in C++ and released under the Apache 2.0 license, one of the least restrictive licenses. Unlike tools like Whisper and DeepSpeech, which focus on deep learning, Kaldi primarily relies on classical, well-established techniques. These include HMMs (Hidden Markov Models), GMMs (Gaussian Mixture Models), and FSTs (Finite State Transducers).

Pros

Kaldi is very reliable. Its code is thoroughly tested and verified.

Although its focus is not on deep learning, it has some models that can help with transcription services.

It is perfect for academic and industry-related research, allowing users to test their models and techniques.

It has an active forum that provides the right amount of support.

There are also resources and documentation available to help users address any issues.

Being open-source, users with privacy or security concerns can inspect the code to understand how it works.

Cons

Its classical approach to models may limit its accuracy levels.

Kaldi is not user-friendly since it operates through a command-line interface.

It's pretty complex to use, making it suitable only for users with technical experience.

You need lots of computation power to use the toolkit.

4. SpeechBrain

SpeechBrain is an open-source toolkit that facilitates the research and development of speech-related tech. It supports a variety of tasks, including speech recognition, enhancement, separation, speaker diarization, and microphone signal processing. SpeechBrain uses PyTorch as its foundation, taking advantage of its flexibility and ease of use. Developers and researchers can also benefit from PyTorch’s extensive ecosystem and support to build and train their neural networks.

Pros

Users can choose between traditional and deep-learning-based ASR models.

It's easy to customize a model to adapt to your needs.

Its integration with PyTorch makes it easier to use.

There are available pre-trained models users can use to get started with speech-to-text tasks.

Cons

The SpeechBrain documentation is not as extensive as that of Kaldi.

Its pre-trained models are limited.

You may need particular expertise to use the tool. Without it, you will face a steep learning curve.

5. Coqui

Coqui is an advanced deep learning toolkit suited to training and deploying STT models. It is licensed under the Mozilla Public License 2.0, and you can use it to generate multiple transcripts, each with a confidence score. It provides pre-trained models alongside example audio files you can use to test the engine and help with further fine-tuning. Moreover, it has detailed documentation and resources to help you use the toolkit and solve any problems that arise.

Pros

The STT models it provides are highly trained with high-quality data.

The models support multiple languages.

There is a friendly support community where you can ask questions and get any details relating to STT.

It supports real-time transcription with very low latency.

Developers can customize the models to various use cases, from transcription to acting as voice assistants.

Cons

Coqui has stopped maintaining the STT project to focus on its text-to-speech toolkit. This means you may have to solve any problems that arise by yourself, without help from support.

6. Julius

Julius is one of the oldest speech-to-text projects, dating back to 1997, with roots in Japan. It is available under the BSD 3-Clause license, making it accessible to developers. It strongly supports Japanese ASR, but because it is language-independent, it can process multiple languages, including English, Slovenian, French, and Thai. The transcription accuracy largely depends on whether you have the right language and acoustic models. The project is written in C, allowing it to run on Windows, Linux, Android, and macOS.

Pros

Julius can perform real-time speech-to-text transcription with low memory usage.

It has an active community that can help with ASR problems.

The models trained in English are readily available on the web for download.

It does not need internet access for speech recognition, making it suitable for users needing privacy.

Cons

Like most open-source programs, it needs users with technical experience to make it work.

It has a steep learning curve.

7. Flashlight ASR (Formerly Wav2Letter++)

Flashlight ASR is an open-source speech recognition toolkit designed by the Facebook AI Research team. It stands out for its speed, efficiency, and ability to handle large datasets. Its speed comes largely from using only convolutional neural networks, an approach that has also been applied to language modeling, machine translation, and speech synthesis.

Typically, speech recognition engines use both convolutional and recurrent neural networks to understand and model the language. However, recurrent networks can need a lot of computation power, which affects the speed of the engine.

Flashlight ASR is written in modern C++, which runs efficiently on both your device’s CPU and GPU. It’s also built on Flashlight, a stand-alone library for machine learning.

Pros

It's one of the fastest machine-learning speech-to-text systems.

You can adapt its use to various languages and dialects.

The model does not consume a lot of GPU and CPU resources.

Cons

It does not provide any pre-trained language models, including English.

You need to have deep coding expertise to operate the tool.

It has a steep learning curve for new users.

8. PaddleSpeech (Formerly DeepSpeech2)

This open-source speech-to-text toolkit is available on the PaddlePaddle platform and provided under the Apache 2.0 license. PaddleSpeech is one of the most versatile toolkits, capable of performing speech recognition, text-to-speech synthesis, keyword spotting, translation, and audio classification. Its quality earned it the NAACL 2022 Best Demo Award.

This speech-to-text engine supports various language models but prioritizes Chinese and English models. The Chinese model, in particular, features text normalization and pronunciation handling adapted to the rules of the Chinese language.

Pros

The toolkit delivers high-end and ultra-lightweight models that use the best technology in the market.

The speech-to-text engine provides both command-line and server options, making it user-friendly to adopt.

It is convenient for both developers and researchers.

Its source code is written in Python, one of the most commonly used languages.

Cons

Its focus on Chinese means resources and support for other languages are limited.

It has a steep learning curve.

You need to have certain expertise to integrate and use the tool.

9. OpenSeq2Seq

As its name suggests, OpenSeq2Seq is an open-source speech-to-text toolkit that helps train different types of sequence-to-sequence models. Developed by Nvidia, this toolkit is released under the Apache 2.0 license, meaning it's free for everyone. It trains language models that perform transcription, translation, automatic speech recognition, and sentiment analysis tasks.

You can either use the default models or train your own, depending on your needs. OpenSeq2Seq performs best when training is spread across multiple GPUs and machines at the same time. It works best on Nvidia-powered devices.

Pros

The tool has multiple functions, making it very versatile.

It can work with the most recent Python, TensorFlow, and CUDA versions.

Developers and researchers can access the tool, collaborate, and make their own innovations.

Beneficial to users with Nvidia-powered devices.

Cons

It can consume significant computer resources due to its parallel processing capability.

Community support has declined since Nvidia paused development of the project.

Users without access to Nvidia hardware can be at a disadvantage.

10. Vosk

One of the most compact and lightweight speech-to-text engines today is Vosk. This open-source toolkit works offline on multiple devices, including Android, iOS, and Raspberry Pi. It supports over 20 languages and dialects, including English, Chinese, Portuguese, Polish and German.

Vosk provides users with small language models that do not take up much space, typically around 50MB. However, a few large models can take up to 1.4GB. The tool is quick to respond and can convert speech to text continuously.

Pros

It can work with various programming languages such as Java, Python, C++, Kotlin, and Shell, making it a versatile addition for developers.

It has various use cases, from transcriptions to developing chatbots and virtual assistants.

It has a fast response time.

Cons

The engine's accuracy can vary depending on the language and accent.

You need coding expertise to integrate and use the tool.
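To give a sense of the coding involved, here is a minimal sketch of offline transcription with the `vosk` Python package. It assumes you have already downloaded and unpacked a Vosk model into a local folder and that the input is a 16-bit mono PCM WAV file. The helper names `text_from_result` and `transcribe_wav` are our own for illustration.

```python
import json
import wave


def text_from_result(result_json: str) -> str:
    """Vosk returns each result as a JSON string; the transcript is under "text"."""
    return json.loads(result_json).get("text", "")


def transcribe_wav(wav_path: str, model_dir: str) -> str:
    """Stream a WAV file through an offline Vosk model and return the transcript."""
    # Imported lazily so the helper above works without the vosk package installed.
    from vosk import KaldiRecognizer, Model

    pieces = []
    with wave.open(wav_path, "rb") as wav:
        recognizer = KaldiRecognizer(Model(model_dir), wav.getframerate())
        while True:
            chunk = wav.readframes(4000)
            if not chunk:
                break
            # AcceptWaveform returns True once an utterance has been finalized.
            if recognizer.AcceptWaveform(chunk):
                pieces.append(text_from_result(recognizer.Result()))
        # Flush whatever audio is still buffered at the end of the file.
        pieces.append(text_from_result(recognizer.FinalResult()))
    return " ".join(piece for piece in pieces if piece)
```

Because the audio is fed in small chunks, the same loop can read from a microphone stream instead of a file, which is how Vosk supports continuous transcription.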

11. Athena

Athena is another sequence-to-sequence-based speech-to-text open-source engine released under the Apache 2.0 license. This toolkit suits researchers and developers with their end-to-end speech processing needs. Some tasks the models can handle include automatic speech recognition (ASR), speech synthesis, voice detection, and keyword spotting. All the language models are implemented on TensorFlow, making the toolkit accessible to more developers.

Pros

Athena is versatile in its use, from transcription services to speech synthesis.

It does not depend on Kaldi since it has its own Pythonic feature extractor.

The tool is well maintained with regular updates and new features.

It is open source, free to use, and available to various users.

Cons

It has a steep learning curve for new users.

Although it has a WeChat group for community support, it limits the accessibility to only those who can access the platform.

12. ESPnet

ESPnet is an open-source speech-to-text toolkit released under the Apache 2.0 license. It provides end-to-end speech processing capabilities that cover tasks ranging from ASR and translation to speech synthesis, enhancement, and diarization. The toolkit stands out for using PyTorch as its deep learning framework and following the Kaldi data processing style. As a result, you get comprehensive recipes for various language-processing tasks. The tool is also multilingual. Use it with the readily available pre-trained models or create your own according to your needs.

Pros

The toolkit delivers a stand-out performance compared to other speech-to-text software.

It can process audio in real time, making it suitable for live transcription services.

Suitable for use by researchers and developers.

It is one of the most versatile tools to deliver various speech-processing tasks.

Cons

It can be complex to integrate and use for new users.

You must be familiar with PyTorch and Python to run the toolkit.

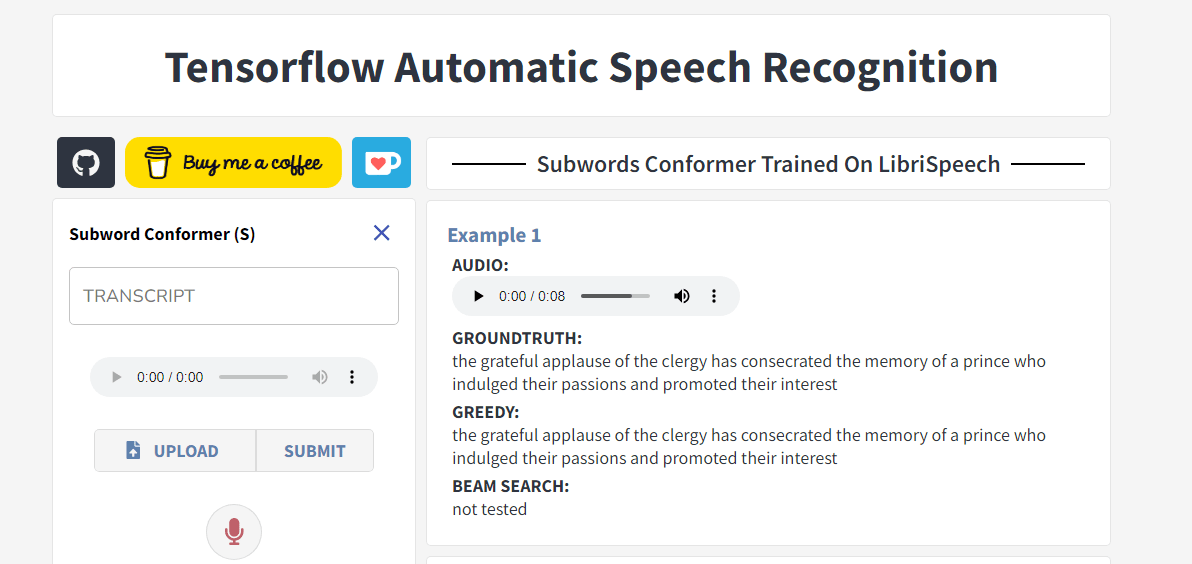

13. TensorFlow ASR

Our last feature on this list of free speech-to-text open-source engines is TensorFlow ASR. This GitHub project is released under the Apache 2.0 license and uses TensorFlow 2.0 as the deep learning framework to implement various speech processing models.

TensorFlow ASR is highly accurate, with its author describing the models as almost ‘state-of-the-art’. It’s also one of the better-maintained tools, receiving regular updates to improve its functionality. For example, the toolkit now supports training on TPUs (Tensor Processing Units, a type of specialized hardware).

TensorFlow ASR also supports specific models such as Conformer, ContextNet, DeepSpeech2, and Jasper. You can choose the model depending on the tasks you intend to handle. For example, for general tasks, consider DeepSpeech2, but for precision, use Conformer.

Pros

The language models are accurate and highly efficient when processing speech-to-text.

You can convert the models to a TFlite format to make it lightweight and easy to deploy.

It can deliver on various speech-to-text-related tasks.

It supports multiple languages and provides pre-trained English, Vietnamese, and German models.

Cons

The installation process can be quite complex for beginners and requires some technical expertise.

There is a learning curve to using advanced models.

TPUs do not allow testing, limiting the tool's capabilities.


Top 3 speech-to-text APIs and AI models

A speech-to-text API or AI model is a hosted solution that converts speech or audio files into text. Most of these solutions are cloud-based: you need internet access and must make an API request to use them. Whether to use an API, an AI model, or an open-source engine largely depends on your needs. An API or AI model is usually preferred for small-scale tasks that are needed quickly. However, for large-scale use, consider using an open-source engine.

Several other differences exist between speech-to-text APIs/AI models and open-source engines. Let's take a look at the most common in the table below:

| Aspect | Speech-to-Text APIs/AI Models | Open-Source Engines |
|---|---|---|
| Licensing Model | Commercial or Free (limited usage) | Open source (usually free) |
| Accessibility | Mostly cloud-based | Typically on-premises |
| Deployment | Quick and easy integration | Requires setup and configuration |
| Scalability | Highly scalable | Scalability depends on the infrastructure |
| Maintenance | The provider manages the tool and provides support when needed. | Self-managed or community-supported. |
| Customization | Comes with a lot more out-of-the-box features. | Allows customization to some extent. |
| Integration with Cloud Services | May offer cloud integration services | Typically standalone. |
| Data Security and Privacy | Compliance depends on the service provider. | You get more control over your data security and privacy. |
| Cost Model | Pay-as-you-go or subscription-based platform | Open-source tools are free to use. |
| Pre-Trained Models Availability | Available, often with various languages and accents. | You can use the available models or train them from scratch. |
| Community and Support | Vendor support and documentation are available. | Community and documentation are available. |
| Use Cases | Quick prototyping, integration, and scalable applications. | Use it on projects with specific customization needs. |
| Accessibility Over the Internet | Yes. | It may not require internet access. |

After considerable research, here are our top three speech-to-text API and AI models:

1. Google

The Google Cloud Speech-to-Text API is one of the most common speech recognition technologies for developers looking to integrate the service into their applications. It automatically detects and converts audio to text using neural network models. Initially, this toolkit was built for Google’s home voice assistant, so its focus is on short command-and-response applications. Although it is not the most accurate option available, it transcribes clear audio with few errors. The quality of the transcript depends on the audio quality.

Google Cloud Speech-to-Text uses pay-as-you-go pricing based on the amount of audio processed per month, measured in seconds. Users get 60 free transcription minutes plus Google Cloud hosting credits worth $300 for the first 90 days. Any audio beyond the free 60 minutes costs $0.006 per 15 seconds.
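To see what those numbers mean in practice, the sketch below estimates a monthly bill from the figures quoted above. It assumes that a partial 15-second block is billed as a full one, and it ignores the $300 hosting credit. Treat it as a back-of-the-envelope calculation and check Google's current pricing page before budgeting.

```python
from decimal import Decimal

FREE_SECONDS = 60 * 60                  # 60 free minutes, as quoted above
INCREMENT_SECONDS = 15                  # billing increment quoted above
PRICE_PER_INCREMENT = Decimal("0.006")  # USD per 15 seconds, as quoted above


def monthly_cost(total_seconds: int) -> Decimal:
    """Estimated USD cost for one month of audio after the free allowance."""
    billable = max(0, total_seconds - FREE_SECONDS)
    # Assumption: round up so a partial 15-second block counts as a full one.
    increments = -(-billable // INCREMENT_SECONDS)
    return increments * PRICE_PER_INCREMENT


print(monthly_cost(45 * 60))    # 45 minutes: inside the free allowance
print(monthly_cost(10 * 3600))  # 10 hours: 9 billable hours
```

With these figures, 45 minutes of audio costs nothing, while ten hours of audio leaves nine billable hours, or 2,160 blocks of 15 seconds, for a total of $12.96.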

Pros

The API can transcribe more than 125 languages and variants.

You can deploy the tool in the cloud and on-premises.

It provides automatic language transcription and translation services.

You can configure it to transcribe your phone and video conversations.

Cons

It is not free to use.

It has a limited vocabulary builder.

2. AWS Transcribe

AWS Transcribe is an on-demand voice-to-text API that lets users generate audio transcriptions. If you have heard of the Alexa voice assistant, this is the technology behind it. Unlike many consumer-oriented transcription tools, the AWS API has a fairly good accuracy level. It can also distinguish voices in a conversation and add timestamps to the transcript. This tool supports 37 languages, including English, German, Hebrew, Japanese, and Turkish.

Pros

Integrating it into an existing AWS ecosystem is effortless.

It is one of the best options for short audio commands and responses.

It is highly scalable.

It has a reasonably good accuracy level.

Cons

It is expensive to use.

It only supports cloud deployment.

It has limited support.

The tool can be slow at times.

3. AssemblyAI

AssemblyAI API is one of the best solutions for users looking to transcribe speech without many technical terms, jargon, or accents. This API model automatically detects audio, transcribes it, and even creates a summary. It also provides services such as speaker diarization, sentiment analysis, topic detection, content moderation, and entity detection.

AssemblyAI has a simple and open pricing model, where you pay for only what you use. For example, you may need to pay $0.650016 per hour to get the core transcription service, while real-time transcription costs $0.75024 per hour.

Pros

It is not expensive to use.

Accuracy levels are high for non-technical language.

It provides helpful documentation.

The toolkit is easy to set up, even for beginners.

Cons

Its deployment speed is slow.

Its accuracy levels drop when dealing with technical terms.

What is the best open-source speech recognition system?

As you can see above, every tool on this list has benefits and disadvantages. Choosing the best open-source speech recognition system depends on your needs and available resources. For example, if you are looking for a lightweight toolkit compatible with almost every device, Vosk and Julius beat the rest of the tools in this list. You can use them on Android, iOS, and even Raspberry Pi. Moreover, they don’t consume much space.

If you want to train your own models, you can use toolkits such as Whisper, OpenSeq2Seq, Flashlight ASR, and Athena.

The best approach to choosing an open-source voice recognition software is to review its documentation to understand the necessary resources and test it to see if it works for your case.

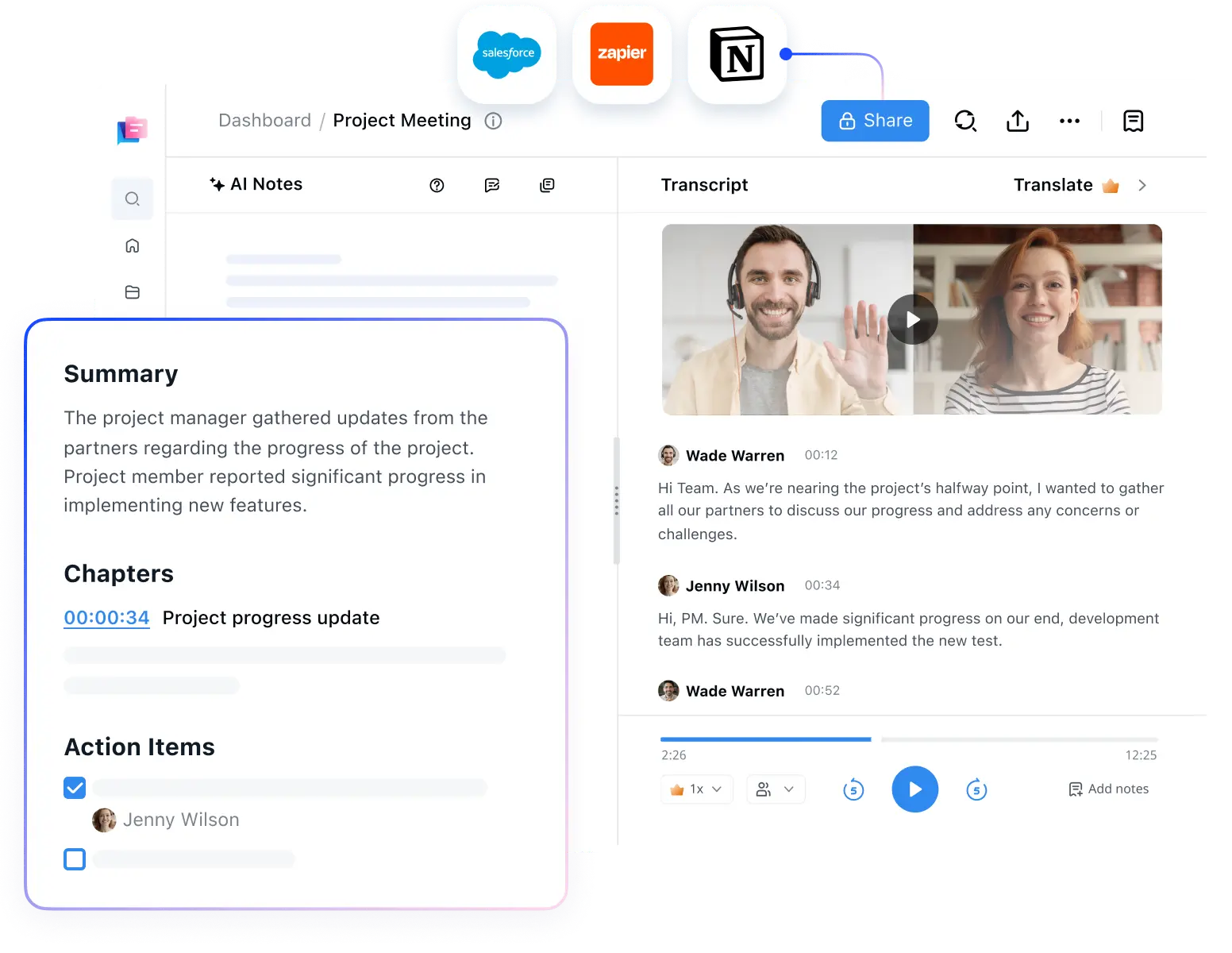

Introducing Notta AI transcription tool

As shown above, AI models differ from open-source engines. They are fast, efficient, easy to use, and can deliver high accuracy. Moreover, you don't need technical experience to use them. Anyone can operate the tools and generate transcripts in minutes.

Here is where we come in. Notta is one of the leading speech-to-text AI models that can transcribe and summarize your audio and video recordings. This AI tool supports 58 languages and can deliver transcripts with an impressive accuracy rate of 98.86%. The tool is available for use both on mobile and web.

Pros

Notta is easy to set up and use.

It supports multiple video and audio formats.

Its transcription speed is lightning-fast.

It adopts rigorous security protocols to protect user data.

It's free to use.

Cons

There is a limit to the file size you can upload to transcribe.

The free version supports only a limited number of transcriptions per month.

Wrapping up

The advancement of speech recognition technology has been impressive over the years. What was once a world of proprietary software has shifted to one led by open-source toolkits and APIs/AI.

It's too early to say which is the clear winner, as they are all improving. You can, however, take advantage of their services, which include transcription, translation, dictation, speech synthesis, keyword spotting, diarization, and language enhancement.

There is no right or wrong tool in the options above. Every one of them has its strengths and weaknesses. Carefully assess your needs and resources before choosing a tool to make an informed decision.